Tokenization is often explained as a way to digitize assets such as bonds, funds, or real estate. That description is accurate, but it misses the more important implication. The real impact of tokenization lies not in the asset itself but in how access to markets is changing. Tokenization is reshaping who can participate, how efficiently capital moves, and how markets are structured.

As financial systems become more digital, access has become a greater constraint than asset availability. Many assets already exist in abundance, but participation is limited by settlement friction, minimum sizes, geographic barriers, and operational complexity. Tokenization addresses these constraints by redesigning market access rather than redefining ownership.

Market access is the real bottleneck in modern finance

In traditional markets, access is restricted by layers of intermediaries, operating hours, and jurisdictional rules. Even large institutions face limitations when moving capital across platforms or regions. These frictions slow down capital formation and reduce market efficiency.

Tokenization simplifies access by creating standardized digital representations that can move across systems more easily. This reduces dependency on fragmented infrastructure and allows markets to operate with fewer delays. The asset itself does not change, but the pathway to it does.

By lowering operational barriers, tokenization expands participation. More institutions can engage with the same markets using shared technical standards. Access becomes more uniform, which supports deeper liquidity and more consistent pricing.

Why asset digitization alone is not the goal

Focusing only on digitizing assets risks misunderstanding tokenization’s purpose. Digitization without improved access simply recreates existing inefficiencies in a new format. The value of tokenization lies in how it restructures interaction with markets.

Tokenized instruments can settle faster, integrate with automated processes, and operate across platforms. These features matter because they reduce the cost and complexity of participation. Institutions can deploy capital without navigating bespoke processes for each market.

This shift also reduces reliance on manual reconciliation and batch processing. Markets become more responsive when access is streamlined. Tokenization supports this responsiveness by embedding access logic, such as eligibility checks and transfer rules, into the instrument itself.
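To make the idea of access logic living inside the instrument more concrete, here is a minimal Python sketch. It is an illustration under stated assumptions, not a description of any particular platform: the class `PermissionedToken`, its allowlist, and the minimum transfer size are all hypothetical names and rules chosen for the example.

```python
from dataclasses import dataclass, field


class TransferRejected(Exception):
    """Raised when a transfer violates the instrument's access rules."""


@dataclass
class PermissionedToken:
    # Hypothetical instrument: the fields and rules below are illustrative only.
    name: str
    min_transfer: int = 1
    allowlist: set = field(default_factory=set)
    balances: dict = field(default_factory=dict)

    def approve(self, holder: str) -> None:
        """Mark a participant as eligible to hold the instrument."""
        self.allowlist.add(holder)

    def issue(self, holder: str, amount: int) -> None:
        """Create new units for an eligible holder."""
        self._check_eligible(holder)
        self.balances[holder] = self.balances.get(holder, 0) + amount

    def transfer(self, sender: str, receiver: str, amount: int) -> None:
        """Move units between holders, enforcing the access rules first."""
        self._check_eligible(sender)
        self._check_eligible(receiver)
        if amount < self.min_transfer:
            raise TransferRejected("amount is below the minimum transfer size")
        if self.balances.get(sender, 0) < amount:
            raise TransferRejected("insufficient balance")
        self.balances[sender] -= amount
        self.balances[receiver] = self.balances.get(receiver, 0) + amount

    def _check_eligible(self, holder: str) -> None:
        if holder not in self.allowlist:
            raise TransferRejected(f"{holder} is not an approved participant")


token = PermissionedToken(name="BOND-A")
token.approve("fund_a")
token.approve("fund_b")
token.issue("fund_a", 100)
token.transfer("fund_a", "fund_b", 40)  # allowed: both parties are approved
```

In this sketch the eligibility check runs on every transfer, so a transfer to an unapproved party is rejected by the instrument itself rather than by a separate back-office process.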

Tokenization and institutional participation

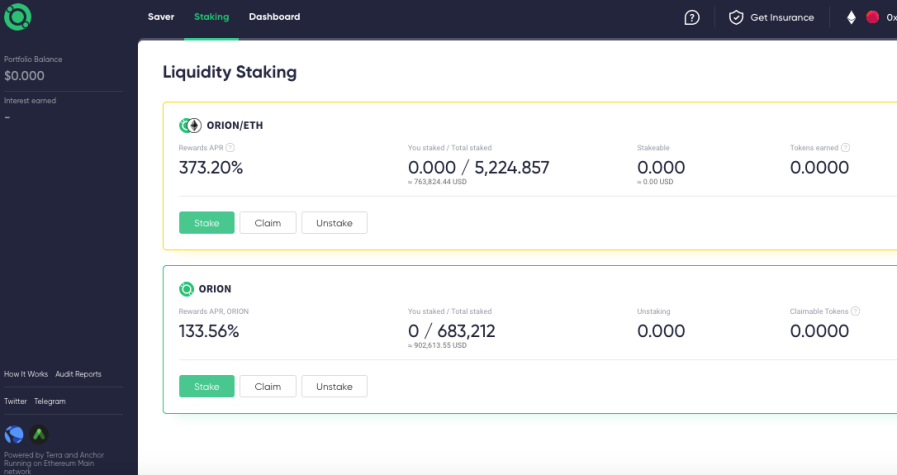

Institutions care deeply about access because it determines scalability. Markets that are difficult to access require more capital, more staff, and more risk management resources. Tokenization reduces these requirements by standardizing how instruments are issued and settled.

This standardization allows institutions to interact with multiple markets through fewer interfaces. It also supports interoperability, enabling assets to move between platforms without complex conversions. Access becomes portable rather than siloed.

As a result, institutions can diversify more efficiently and respond faster to changing conditions. Tokenization supports this flexibility by turning access into a technical feature rather than an operational hurdle.

How tokenization changes liquidity dynamics

Improved access has direct implications for liquidity. When more participants can trade and settlement becomes faster, liquidity tends to improve, because capital is released sooner and can be redeployed. Tokenization enables this by allowing capital to circulate more freely.

Markets with tokenized access can operate closer to continuous settlement. This reduces idle capital and shortens the time between trade and completion. Liquidity becomes more usable rather than merely theoretical.
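A rough back-of-the-envelope calculation shows why shorter settlement reduces idle capital. The sketch below assumes a constant daily trading volume, gross settlement with no netting, and a market that operates around the clock. The function name `capital_in_transit` and the volume figure are hypothetical and chosen only for illustration.

```python
def capital_in_transit(daily_volume: float, settlement_days: float) -> float:
    """Average value of trades that are executed but not yet settled.

    Simplifying assumptions: constant daily volume and gross settlement
    (no netting), so the unsettled amount is volume times settlement time.
    """
    if daily_volume < 0 or settlement_days < 0:
        raise ValueError("inputs must be non-negative")
    return daily_volume * settlement_days


daily_volume = 10_000_000  # hypothetical daily traded value

two_day_cycle = capital_in_transit(daily_volume, 2)        # two-day settlement
within_an_hour = capital_in_transit(daily_volume, 1 / 24)  # settlement in one hour

print(f"Two-day settlement: {two_day_cycle:,.0f} awaiting settlement")
print(f"One-hour settlement: {within_an_hour:,.0f} awaiting settlement")
```

Under these assumptions, a two-day cycle leaves about 20 million awaiting settlement at any time, while one-hour settlement leaves roughly 417 thousand. Real markets net positions and vary in volume, so the numbers indicate direction rather than magnitude.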

Importantly, this does not require eliminating existing market structures. Tokenization can coexist with traditional systems while enhancing how they connect. Liquidity benefits emerge from integration rather than replacement.

Regulatory alignment and access-focused design

Tokenization’s success depends on regulatory compatibility. Access must expand without undermining oversight. This is why many tokenization efforts focus on permissioned environments and clear governance structures.

Regulators are less concerned with asset format than with market behavior. Tokenization that improves access while maintaining controls aligns with regulatory priorities around transparency and stability.

By framing tokenization as an access solution rather than an asset experiment, institutions and regulators can engage more constructively. The conversation shifts from novelty to practicality.

The long-term implications for market structure

Over time, tokenization is likely to influence how markets are organized. Platforms that prioritize access efficiency will attract more participation. Markets may become less defined by geography and more by connectivity.

This evolution supports a more integrated financial system where access is determined by standards rather than location. Tokenization plays a key role by providing the technical foundation for this integration.

The focus on access also explains why tokenization progresses quietly. Its impact is felt through smoother operations rather than dramatic headlines. Markets become more accessible without appearing radically different.

Conclusion

Tokenization is not primarily about creating digital assets. It is about redesigning market access. By reducing friction, improving interoperability, and simplifying participation, tokenization addresses one of the biggest constraints in modern finance. As institutions seek efficiency and scalability, access-focused tokenization will continue to shape how markets function, even if the assets themselves look familiar.